This post discusses more involved topics in VTK, each of which addresses a use-case for the 3D environment – complex models, terrains, and sensor visualizations. Two different types of sensor visualizations are incorporated into the project – a video feed screen and a LIDAR scanner point-cloud. The full project code is provided as a download link just below (and is set up to run outside Eclipse if needed).

The last post discussed how the base environment is set up in VTK, i.e. how to create a window and a renderer, build a few primitives, and include them in the world. This will be used to build a complete ‘Bot’ class for the environment.

The code for this post can be found here. As the project grows bigger, it’s becoming difficult to copy complete files into the blog. So from this post on only snippets will be discussed to save virtual paper.

Each topic discussed in this post is almost an independent question. The following topics are discussed:

- I have primitive models (spheres, cylinders, etc.) but I want to import something complex, e.g. from Blender or 3DS Max, how do I do that?

- How do I assign a texture to the model?

- How do I include a terrain (a.k.a. a height-map) for my environment?

- How do I visualize sensor information from the bot? The following are used as examples:

- A video feed screen for the bot

- A LIDAR feed for the bot

Importing Complex Models

In a variety of projects you might need to include complex models for props or data sources. In the case of props, these can be loaded in Blender or 3DS Max and exported in a suitable file format (STL or OBJ for this project).

This section looks at how to load these models and add them to the visualization environment.

To load a model from a file, you will need to:

- Export the file to an importable file format:

- There are a variety of different file formats that can be loaded – most of which are described in the ParaView Users Guide. In general I’ve been working with Stereo Lithography (*.STL) and WaveFront (*.OBJ) formats because they seem to be easily imported

- In terms of finding models, you can find a large selection of free models on TurboSquid in common file formats

- You can use Blender (free), MilkShape (cheap) or 3DS Max (cheap… if you drive a Veyron) to export your file to a readable format

- For this project, we’ve set up ‘up and forward’ as unit-y and unit-z respectively, so before you export you might want to rotate your model to face up along the Y axis and forward along the Z axis

- Lastly, check that your model is situated at the origin before exporting, otherwise it will have a strange offset which it will rotate around

- Create a new Python module/class that inherits from the ‘SceneObject’ class

- In the constructor (__init__() method):

- Create the specific reader to read the file. In this project we’re using either OBJ or STL files, so the two potential readers are ‘vtkOBJReader’ and ‘vtkSTLReader’ respectively. If you are using something different, you will need to find your reader in the VTK class documentation

- Point the reader to your file

- Rotate/scale the model with a ‘vtkTransform’ in the event that we exported it incorrectly (…which happens every single time, for me anyway)

- Create a mapper for the model

- Assign the mapper to the existing ‘vtkActor’ from the inherited ‘SceneObject’ class

- The inputs and outputs of each class are wired up in the following way: Reader -> Transform -> Mapper -> Actor

- The model will then draw and move around if we use the position setters and getters as we discussed in the previous post
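
The origin and orientation checks in the export steps can also be done numerically. The following is a minimal pure-Python sketch, not part of the project code: `recentre()` and `rotate_z()` are hypothetical helpers, and the short vertex list stands in for whatever your modelling tool exports. It assumes the usual right-handed convention, where a positive angle rotates counter-clockwise about Z.

```python
import math


def recentre(vertices):
    """Shift vertices so the centre of their bounding box sits at the origin."""
    mins = [min(v[axis] for v in vertices) for axis in range(3)]
    maxs = [max(v[axis] for v in vertices) for axis in range(3)]
    centre = [(lo + hi) / 2.0 for lo, hi in zip(mins, maxs)]
    return [tuple(v[axis] - centre[axis] for axis in range(3)) for v in vertices]


def rotate_z(vertex, degrees):
    """Rotate one vertex counter-clockwise about the Z axis."""
    a = math.radians(degrees)
    x, y, z = vertex
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


# A made-up model whose bounding box is centred at (2, 0, 2) instead of the origin
model = [(1.0, -1.0, 0.0), (3.0, 1.0, 4.0)]
centred = recentre(model)
rotated = [rotate_z(v, 90) for v in centred]
print(centred)
print(rotated)
```

If the centred and original vertex lists differ, the model was exported with an offset and will appear to orbit its pivot when rotated in the scene.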

For this portion of the project, we will use this set of steps to add a Bot model into the scene (‘Bot.py’ in the scene folder). This model was downloaded from TF3DM and cleaned up in Blender, but it was oriented incorrectly so the transform was useful.

A quick discussion on the steps in ‘Bot.py’:

- Create a bot class that inherits from the ‘SceneObject’ class:

class Bot(SceneObject):

'''

A template for loading complex models.

'''

- Set the filename to the location of the model file on your hard-drive, which can be found in the media folder in the scene directory, and create a reader for the file:

# Call the parent constructor

super(Bot,self).__init__(renderer)

filename = "../scene/media/bot.stl"

reader = vtk.vtkSTLReader()

reader.SetFileName(filename)

- Create a transform, set the rotation so that the bot points forward along the Z-axis, and bind the output of the reader to the input of the transform:

# Do the internal transforms to make it look along unit-z, do it with a quick transform

trans = vtk.vtkTransform()

trans.RotateZ(90)

transF = vtk.vtkTransformPolyDataFilter()

transF.SetInputConnection(reader.GetOutputPort())

transF.SetTransform(trans)

- Create a mapper, set the mapper’s input to the output of the transform, and set the ‘SceneObject’ actor to use the final mapper:

# Create a mapper

self.__mapper = vtk.vtkPolyDataMapper()

self.__mapper.SetInputConnection(transF.GetOutputPort())

self.vtkActor.SetMapper(self.__mapper)

- Call a (currently empty) method that will add in the children for this model, and reset the model to the world’s origin so that the children will update:

# Set up all the children for this model

self.__setupChildren(renderer)

# Set it to [0,0,0] so that it updates all the children

self.SetPositionVec3([0, 0, 0])

- This method is used to add in the video feed and the LIDAR sensor. If you are looking at the final project file, it is already filled in; in this post it will be filled in as we introduce the sensors. The empty method definition is:

def __setupChildren(self, renderer):

'''

Configure the children for this bot - camera and other sensors.

'''

return

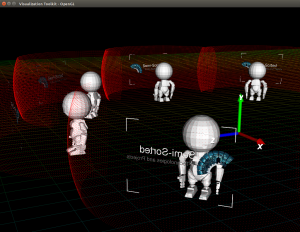

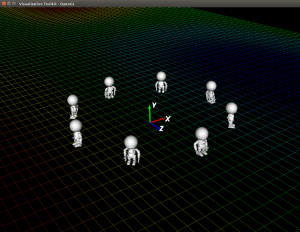

To create it in the main program, you just need to instantiate a bot class with the current renderer (in this case I placed a few of them in a circle):

...

# Initialize a set of test bots

numBots = 8

bots = []

for i in xrange(0, numBots):

bot = Bot.Bot(renderer)

# Put the bot in a cool location

location = [10 * cos(i / float(numBots) * 6.283), 0, 10 * sin(i / float(numBots) * 6.283)]

bot.SetPositionVec3(location)

# Make them all look outward

yRot = 90.0 - i / float(numBots) * 360.0

bot.SetOrientationVec3([0, yRot, 0])

bots.append(bot)

....
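
To see what the loop above produces without opening a render window, here is a small standalone sketch of the same layout maths. The `circle_layout()` helper and its `radius` parameter are my own names for illustration, not part of the project; it uses `2 * math.pi` for the full turn.

```python
import math


def circle_layout(num_bots, radius=10.0):
    """Return (position, y_rotation_degrees) pairs spaced evenly on a circle."""
    layout = []
    for i in range(num_bots):
        fraction = i / float(num_bots)
        angle = fraction * 2.0 * math.pi
        position = [radius * math.cos(angle), 0.0, radius * math.sin(angle)]
        # Same yaw formula as the loop above: 90 degrees minus the angle around the circle
        y_rot = 90.0 - fraction * 360.0
        layout.append((position, y_rot))
    return layout


for position, y_rot in circle_layout(8):
    print([round(c, 3) for c in position], y_rot)
```

Each pair could then be passed to ‘SetPositionVec3()’ and ‘SetOrientationVec3()’ in the same way as the loop does.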

Adding a Texture

In some instances you might like to add a texture to a UV-mapped model. This isn’t done with the current bot model, but will be used later for the video feed.

- Texturing is pretty straightforward: just add a small piece of code to the __init__() method of the bot class:

textReader = vtk.vtkPNGReader()

textReader.SetFileName("[MY TEXTURE]")

self.texture = vtk.vtkTexture()

self.texture.SetInputConnection(textReader.GetOutputPort())

self.texture.InterpolateOn()

- Lastly the texture is assigned to the actor to apply it:

self.vtkActor.SetTexture(self.texture)

Creating a Terrain

A terrain is the same as a 3D plot of a surface. There is a good example of surface plotting in the VTK examples – ‘expCos.py’ – and this is used to build the terrain in this project.

To create a terrain:

- Create a flat tessellated surface using a plane source

- The source will provide a surface that is aligned to the XY plane, which is facing the screen, so use another transformation filter to set it on the ground (XZ plane)

- Use a warp filter to set the height of each point:

- Create a programmable filter to set scalar values for each height point on the terrain – in this project we use a simple sine/cosine formula to give some definition to the surface

- You can easily replace this step and load the height map from a texture. Let me know if you want a code snippet for this

- Use a warp filter to deform the terrain to the heights found in the scalar values

- Apply a mapper, which will use the scalar values for colour, and tell it the range of the height so that it colours it correctly

As discussed, you can easily replace the 3rd step by loading the terrain heights from a texture.
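
As a rough illustration of the height-map alternative, here is a pure-Python sketch that turns grey levels into heights. It is not taken from ‘Terrain.py’: `heights_from_grey()` and its parameters are hypothetical, and the pixel grid is hard-coded where a real version would read the values from an image file.

```python
def heights_from_grey(pixels, min_height=-1.0, max_height=1.0):
    """Map 8-bit grey levels (0-255) linearly onto [min_height, max_height]."""
    span = max_height - min_height
    return [[min_height + (value / 255.0) * span for value in row]
            for row in pixels]


# A tiny 3x3 'image': dark corners, bright centre
pixels = [
    [0, 128, 0],
    [128, 255, 128],
    [0, 128, 0],
]
heights = heights_from_grey(pixels)
for row in heights:
    print([round(h, 3) for h in row])
```

The height for each terrain point would then be looked up from this grid rather than computed from a formula, and the mapper's scalar range would be set to the same minimum and maximum heights.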

The code for this can be found in the ‘Terrain.py’ file in the scene folder. A quick discussion of the implementation:

- The initialization function for the ‘Terrain’ class is provided with a size (‘surfaceSize’) for the total width and height of the terrain

- Create the plane source with the specified definition, scale it by the ‘surfaceSize’ parameter, and rotate it so that it is a ground plane:

...

# We create a 'surfaceSize' by 'surfaceSize' point plane to sample

plane = vtk.vtkPlaneSource()

plane.SetXResolution(surfaceSize)

plane.SetYResolution(surfaceSize)

# We transform the plane by a factor of 'surfaceSize' on X and Y

transform = vtk.vtkTransform()

transform.Scale(surfaceSize, surfaceSize, 1)

transF = vtk.vtkTransformPolyDataFilter()

transF.SetInputConnection(plane.GetOutputPort())

transF.SetTransform(transform)

- Wire a programmable filter to the output of the transform:

...

# Compute the function that we use for the height generation.

# [Original comment] Note the unusual GetPolyDataInput() & GetOutputPort() methods.

surfaceF = vtk.vtkProgrammableFilter()

surfaceF.SetInputConnection(transF.GetOutputPort())

- Create a function for the programmable filter (an arbitrary circle formula is used here), and assign it to the programmable filter:

# [Original comment] The SetExecuteMethod takes a Python function as an argument

# In here is where all the processing is done.

def surfaceFunction():

input = surfaceF.GetPolyDataInput()

numPts = input.GetNumberOfPoints()

newPts = vtk.vtkPoints()

derivs = vtk.vtkFloatArray()

for i in range(0, numPts):

x = input.GetPoint(i)

x, z = x[:2] # Get the XY plane point, which we'll make an XZ plane point so that it's a ground surface - this is a convenient point to remap it...

# Now do your surface construction here, which we'll just make an arbitrary wavy surface for now.

y = sin(x / float(surfaceSize) * 6.283) * cos(z / float(surfaceSize) * 6.283)

newPts.InsertPoint(i, x, y, z)

derivs.InsertValue(i, y)

surfaceF.GetPolyDataOutput().CopyStructure(input)

surfaceF.GetPolyDataOutput().SetPoints(newPts)

surfaceF.GetPolyDataOutput().GetPointData().SetScalars(derivs)

surfaceF.SetExecuteMethod(surfaceFunction)

- Warp the surface (by wiring a warp filter to the output of the programmable filter):

# We warp the plane based on the scalar values calculated above

warp = vtk.vtkWarpScalar()

warp.SetInputConnection(surfaceF.GetOutputPort())

warp.XYPlaneOn()

- Create the mapper (which will interpret the scalar data in the source as a colour by default) and tell it to set the brightest/darkest to the maximum/minimum height of the terrain:

# Set the range of the colour mapper to the function min/max we used to generate the terrain.

mapper = vtk.vtkPolyDataMapper()

mapper.SetInputConnection(warp.GetOutputPort())

mapper.SetScalarRange(-1, 1)

- Lastly, set the terrain to a wireframe (strictly optional but it makes it look all retro 1990’s-ish), and assign the mapper to the ‘SceneObject’ actor so that it is drawn:

# Make our terrain wireframe so that it doesn't occlude the whole scene

self.vtkActor.GetProperty().SetRepresentationToWireframe()

# Finally assign this to the parent class actor so that it draws.

self.vtkActor.SetMapper(mapper)
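
The scalar range of (-1, 1) passed to the mapper comes from the bounds of the height formula. If you swap in a different formula, one way to find a suitable range is to sample it over the terrain first. The sketch below does this in plain Python; `terrain_height()` and `height_range()` are hypothetical helpers, and it assumes the scaled plane spans -surfaceSize/2 to +surfaceSize/2 on both axes.

```python
import math


def terrain_height(x, z, surface_size):
    """Height formula from the snippet above, with 2*pi written out explicitly."""
    return (math.sin(x / float(surface_size) * 2.0 * math.pi) *
            math.cos(z / float(surface_size) * 2.0 * math.pi))


def height_range(surface_size, samples=101):
    """Sample the surface on a regular grid and return (lowest, highest)."""
    half = surface_size / 2.0
    step = surface_size / float(samples - 1)
    values = [terrain_height(-half + i * step, -half + j * step, surface_size)
              for i in range(samples) for j in range(samples)]
    return min(values), max(values)


low, high = height_range(100)
print(low, high)
```

The two values returned can be handed straight to ‘SetScalarRange()’ so that the colour map covers the full height of the terrain.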

To add it to the world in ‘bot_vis_main.py’, the same method as before is used (this is pretty much standard; however, here we also provide it with a size parameter, set to 100):

...

# Create our new scene objects...

terrain = Terrain.Terrain(renderer, 100)

...

Building Sensor Displays

The final addition to the scene will be some sensor displays for the bot. In this project we are assuming that we have a video feed and a LIDAR feed from the bot, and we would like to represent both in the 3D environment.

Each is built in the same way as the previous examples – i.e. inheriting the ‘SceneObject’ class – however they both add a slight wrinkle in the implementation:

- The video screen feed will include a video feed texture (briefly discussed in the previous sections)

- The LIDAR feed will make use of a point cloud to draw the depth image

A Video Feed Screen

The video screen feed code can be found in the ‘CameraScreen.py’ file, which is located in the ‘scene’ project folder.

Here is a quick breakdown of the code:

- Create a plane source as was done with terrain:

...

def __init__(self, renderer, screenDistance, width, height):

'''

Initialize the CameraScreen model.

'''

# Call the parent constructor

super(CameraScreen,self).__init__(renderer)

# Create a plane for the camera

# Ref: http://www.vtk.org/doc/nightly/html/classvtkPlaneSource.html

planeSource = vtk.vtkPlaneSource()

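- The plane source creates its plane in the XY plane, facing along the Z-axis. We would like to ‘push’ it a little along the Z-axis so that when positioned on the bot, it will be in front of it (by ‘screenDistance’ units). The ‘Push()’ call is left commented out here, because the translation in the transform that follows does the same job: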

# Defaults work for this, so just push it out a bit

#planeSource.Push(screenDistance)

- Use a transform again to scale it to the specified video size (‘width’ and ‘height’, both parameters of the ‘CameraScreen’ constructor), move it out by ‘screenDistance’, and flip it so that it is not drawn mirrored:

# Transform scale it to the right size

trans = vtk.vtkTransform()

trans.Scale(width, height, 1)

trans.Translate(0, 0, screenDistance)

trans.RotateY(180) # Effectively flipping the UV (texture) mapping so that the video isn't left/right flipped

transF = vtk.vtkTransformPolyDataFilter()

transF.SetInputConnection(planeSource.GetOutputPort())

transF.SetTransform(trans)

- Read in a texture:

# Create a test picture and assign it to the screen for now...

# Ref: http://vtk.org/gitweb?p=VTK.git;a=blob;f=Examples/Rendering/Python/TPlane.py

textReader = vtk.vtkPNGReader()

textReader.SetFileName("../scene/media/semisortedcameralogo.png")

self.cameraVtkTexture = vtk.vtkTexture()

self.cameraVtkTexture.SetInputConnection(textReader.GetOutputPort())

self.cameraVtkTexture.InterpolateOn()

- Lastly, create a mapper (same as the terrain example), and assign the texture and the mapper to the actor:

# Finally assign the mapper and the actor

planeMapper = vtk.vtkPolyDataMapper()

planeMapper.SetInputConnection(transF.GetOutputPort())

self.vtkActor.SetMapper(planeMapper)

self.vtkActor.SetTexture(self.cameraVtkTexture)
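
One detail worth checking is the order in which the three transform operations reach the plane's points. Assuming VTK's default pre-multiply mode, the operation declared last is applied to the points first, so the screen is rotated, then translated, then scaled. The pure-Python sketch below mimics that order without VTK. The helper name `screen_corner()` is hypothetical, and the corner coordinates assume the plane source's default unit square centred on the origin.

```python
import math


def screen_corner(corner, width, height, screen_distance):
    """Apply RotateY(180), then Translate, then Scale to one plane corner."""
    x, y, z = corner
    # Rotate 180 degrees about Y
    a = math.radians(180.0)
    x, z = (x * math.cos(a) + z * math.sin(a),
            -x * math.sin(a) + z * math.cos(a))
    # Push the screen out along Z
    z += screen_distance
    # Scale to the video size (Z is left unscaled)
    return (x * width, y * height, z)


# Corners of a unit plane, with a 4 x 3 screen placed 3 units out
corners = [(-0.5, -0.5, 0.0), (0.5, -0.5, 0.0), (-0.5, 0.5, 0.0), (0.5, 0.5, 0.0)]
for corner in corners:
    print(corner, '->', tuple(round(c, 6) for c in screen_corner(corner, 4, 3, 3)))
```

With these numbers the screen ends up 4 units wide and 3 units high, sitting 3 units along positive Z, with its left and right edges swapped by the rotation.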

Next, we need to edit the bot class. Create a camera screen and assign it as one of the children of the bot (remember that ‘SceneObject’ instances have position- and orientation-bound children). This is done inside the ‘Bot.__setupChildren()’ method:

...

def __setupChildren(self, renderer):

'''

Configure the children for this bot - camera and other sensors.

'''

# Create a camera screen and set the child's offset.

self.camScreen = CameraScreen.CameraScreen(renderer, 3, 4, 3)

self.camScreen.childPositionOffset = [0, 2.5, 0]

# Add it to the bot's children

self.childrenObjects.append(self.camScreen)

...

Just a quick note if you want to constantly update the video feed: If you change the texture for the camera (‘CameraScreen.cameraVtkTexture’) it will then draw the updated texture on the video feed screen. You might have to call the source’s update method to let VTK know that the data has changed.

A LIDAR Feed Visualization

The last sensor to be added is the LIDAR feed. This is a little different from the rest of the items, and should be regarded as an optional extra, because it has a bit more body than the other cases. It can be found in ‘LIDAR.py’ in the scene subfolder.

In this case:

- It is assumed that we will be provided with a 2D matrix of points, each point indicating a laser measurement of the depth at that specific point

- We have to convert this 2D matrix from polar coordinates and project it as a hemispherical depth map in world-space

- The scanner will measure both along the horizontal as well as the vertical:

- The horizontal axis is scanned from a minimum angle of ‘minTheta’ to a maximum angle of ‘maxTheta’, with ‘numThetaReadings’ readings in-between

- The vertical axis is scanned from a minimum angle of ‘minPhi’ to a maximum angle of ‘maxPhi’, with ‘numPhiReadings’ readings in-between

- Each depth reading will be colour-coded (similar to the terrain), with a minimum and max depth value of ‘minDepth’ and ‘maxDepth’ respectively

- We need to set the depth values to some initial value until we get the first scan; this is the ‘initialDepth’ constructor parameter

The bulk of the work is done in the initialization method, which is shown below. It takes in these parameters and generates the point cloud data structures. For this template I was playing around with converting the 2D measurement matrix to the visualized hemisphere, so I have provided a method ‘UpdatePoints()’ that takes in a NumPy matrix and puts all the points in the right place for the visualization.

A quick discussion on the ‘LIDAR.py’ code:

- The constructor initializes the data structures used by the point cloud (the points, depth values, and cells used to represent the data):

...

def __init__(self, renderer, minTheta, maxTheta, numThetaReadings, minPhi, maxPhi, numPhiReadings, minDepth, maxDepth, initialDepth):

...

# Cache these parameters

self.numPhiReadings = numPhiReadings

self.numThetaReadings = numThetaReadings

self.thetaRange = [minTheta, maxTheta]

self.phiRange = [minPhi, maxPhi]

# Create a point cloud with the data

self.vtkPointCloudPoints = vtk.vtkPoints()

self.vtkPointCloudDepth = vtk.vtkDoubleArray()

self.vtkPointCloudDepth.SetName("DepthArray")

self.vtkPointCloudCells = vtk.vtkCellArray()

self.vtkPointCloudPolyData = vtk.vtkPolyData()

# Set up the structure

self.vtkPointCloudPolyData.SetPoints(self.vtkPointCloudPoints)

self.vtkPointCloudPolyData.SetVerts(self.vtkPointCloudCells)

self.vtkPointCloudPolyData.GetPointData().SetScalars(self.vtkPointCloudDepth)

self.vtkPointCloudPolyData.GetPointData().SetActiveScalars("DepthArray")

- Points, with temporary values of [1,1,1], are created for each ‘pixel’ in the scan and are pushed into the data structure. The update method is then called with the initial depth value to calculate the correct real-world positions of the measurements:

# Build the initial structure

for x in xrange(0, self.numThetaReadings):

for y in xrange(0, self.numPhiReadings):

# Add the point

point = [1, 1, 1]

pointId = self.vtkPointCloudPoints.InsertNextPoint(point)

self.vtkPointCloudDepth.InsertNextValue(1)

self.vtkPointCloudCells.InsertNextCell(1)

self.vtkPointCloudCells.InsertCellPoint(pointId)

# Use the update method to initialize the points with a NumPy matrix

initVals = numpy.ones((numThetaReadings, numPhiReadings)) * initialDepth

self.UpdatePoints(initVals)

- Note that I’m using NumPy here, which you will need as part of your Python components – I’m pretty sure it’s already installed, but please post a message if it doesn’t work.

- A normal mapper is defined and connected to the ‘SceneObject’ actor as above. It is set to use the point cloud poly data as an input. The scalar values of the point cloud structure will be used for the colours (representing depth), so the range is set for the mapper to present the right depth colour for each scalar value. Note that ‘SetInput()’ is the VTK 5 call; VTK 6 and later use ‘SetInputData()’ instead:

# Now build the mapper and actor.

mapper = vtk.vtkPolyDataMapper()

mapper.SetInput(self.vtkPointCloudPolyData)

mapper.SetColorModeToDefault()

mapper.SetScalarRange(minDepth, maxDepth)

mapper.SetScalarVisibility(1)

self.vtkActor.SetMapper(mapper)

- Lastly, a template for an update method is included in the code. Pass in a 2D matrix of depth measurements and it will convert them to absolute measurements in the world. This is done by converting the polar coordinate to a world-space coordinate:

def UpdatePoints(self, points2DNPMatrix):

'''Update the points with a 2D array that is numThetaReadings x numPhiReadings containing the depth from the source'''

for x in xrange(0, self.numThetaReadings):

theta = (self.thetaRange[0] + float(x) * (self.thetaRange[1] - self.thetaRange[0]) / float(self.numThetaReadings)) / 180.0 * 3.14159

for y in xrange(0, self.numPhiReadings):

phi = (self.phiRange[0] + float(y) * (self.phiRange[1] - self.phiRange[0]) / float(self.numPhiReadings)) / 180.0 * 3.14159

r = points2DNPMatrix[x, y]

# Polar coordinates to Euclidean space

point = [r * sin(theta) * cos(phi), r * sin(phi), r * cos(theta) * cos(phi)]

pointId = y + x * self.numPhiReadings

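self.vtkPointCloudPoints.SetPoint(pointId, point)

# Store the reading as the point's scalar so that the mapper can colour it by depth

self.vtkPointCloudDepth.SetValue(pointId, r)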

self.vtkPointCloudCells.Modified()

self.vtkPointCloudPoints.Modified()

self.vtkPointCloudDepth.Modified()
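
The polar-to-world conversion is easier to sanity-check outside VTK. Here is a minimal standalone sketch of the same mapping using plain lists instead of a NumPy matrix. `scan_to_points()` is a hypothetical helper rather than part of ‘LIDAR.py’, and the tiny 4 x 2 scan is made-up test data.

```python
import math


def scan_to_points(depths, theta_range, phi_range):
    """Convert a theta-by-phi grid of depth readings into [x, y, z] points."""
    num_theta = len(depths)
    num_phi = len(depths[0])
    points = []
    for i in range(num_theta):
        theta = math.radians(theta_range[0] +
                             i * (theta_range[1] - theta_range[0]) / float(num_theta))
        for j in range(num_phi):
            phi = math.radians(phi_range[0] +
                               j * (phi_range[1] - phi_range[0]) / float(num_phi))
            r = depths[i][j]
            # Same mapping as UpdatePoints(): Y is up, Z is forward
            points.append([r * math.sin(theta) * math.cos(phi),
                           r * math.sin(phi),
                           r * math.cos(theta) * math.cos(phi)])
    return points


# A 4 x 2 scan where every reading is 5 units away
depths = [[5.0, 5.0] for _ in range(4)]
points = scan_to_points(depths, (-90.0, 90.0), (0.0, 45.0))
for p in points:
    print([round(c, 3) for c in p])
```

Because every reading here is 5 units, every output point should lie 5 units from the origin, which is a quick way to confirm that the angles are being converted correctly.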

Lastly, the LIDAR visualization is also added to the children of the bot in ‘Bot.py’. The visualization is initialized with the discussed parameters (which depend on the specs of the theoretical scanner, these were chosen just for testing) and the child object is moved up to sit at head-height of the bot:

...

# Create the LIDAR template and set it to the child's offset as well

self.lidar = LIDAR.LIDAR(renderer, -90, 90, 180, -22.5, 22.5, 45, 5, 15, 5)

self.lidar.childPositionOffset = [0, 2.5, 0]

# Add it to the bot's children

self.childrenObjects.append(self.lidar)

Summary

That’s pretty much a wrap for the 3D modelling portion of this project! The next post will deal with interaction and custom cameras so that we can bind cameras to bots in both 1st and 3rd person. What the bot sees, we see. I’ll also leverage a bit of the design principles from the ARX project to make it more natural for users to navigate in the environment.

…By the way, this is becoming a CodeProject article in the next few weeks, so if you think anything might need to be added before it’s moved across, please let me know!

– Sam